I once read a blog post by Oona Räisänen that really inspired me. In her project she encoded a digital signal into the referee's whistle. I asked myself whether there is any other household object that emits radiation and could be used as a carrier for a low-speed digital signal. I looked around and there was a light bulb.


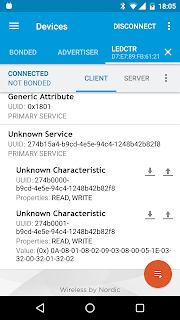

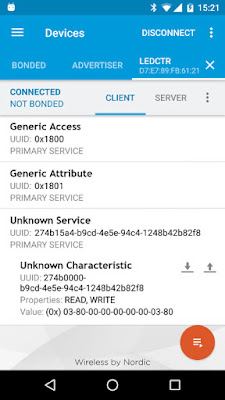


Using light as a signal transmitter is not a novelty at all. Besides all the optoelectronics stuff, Apple recently stirred up some waves with its Li-Fi announcements, which promise ultra-fast communication by means of a light bulb. I had something else in mind. I did not care about speed; ad-hoc transmission of small data chunks carrying location IDs or sensor measurements was much more interesting to me. The use case looks like this: you walk with your smartphone into a room lit by an innocent-looking light bulb and, presto, the smartphone picks up information from the bulb without any special arrangement, i.e. you don't have to point your device anywhere. Speed and data size are of secondary concern in this use case, which is quite a common scenario in the world of the Internet of Things (IoT). Also, the light from the bulb must look completely ordinary to a human observer: no blinking, etc. This post is a tale of that adventure.

As I had prior experience with reading infrared signals from ordinary remote controls and sending them to the smartphone, I started with the method IR remote controls use to communicate with their receivers. In short, if you have not met this system yet: the emitter sends out periods of "0s" (no light) and "1s" (IR light modulated at a certain frequency, typically 38 kHz). It is the length of these periods that carries the information. The common approach is that, after a series of sync bursts, a digital 0 is encoded as a no light/light sequence of specified durations. A digital 1 is encoded similarly, except that the durations of the no light/light periods are different. You can observe this in action by watching the LED of an ordinary remote control through a smartphone camera. As the smartphone camera sensor "sees" in infrared (even though manufacturers try to filter out this behaviour), you will see flashes of infrared light when you push a button on the remote.

Compared to the IR remote, we have an additional requirement: the human observer must not notice that the light bulb is doing something weird. For this purpose, I changed the modulation scheme. "No light" does not mean that the light bulb is switched off (that would cause a very annoying flicker, which humans are very sensitive to); only the modulation frequency changes. In our system, the "light" periods are modulated at 38 kHz so that the popular IR remote decoder chips can be used, and the "no light" periods at 48 kHz. For the steep band-pass input filters of those IR receiver chips, 48 kHz is essentially "no light". Considering how noisy the visible light domain is, I also changed a popular IR modulation scheme: now a 0 bit is 564 µs of 48 kHz signal followed by 564 µs of 38 kHz signal, and a 1 bit is 1692 µs of 48 kHz signal followed by 564 µs of 38 kHz signal. The whole payload is 20 bits long, which allows transmitting a 16-bit value and a 4-bit data type selector. The data type selector lets the emitter send multiple types of data sequentially. In our demo, these are the station ID (for location), temperature and humidity (the latter two obtained from a DHT-22 sensor on the emitter board).
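The following minimal C sketch makes the bit timing concrete. It packs a 4-bit data type and a 16-bit value into the 20-bit payload, then expands that payload into carrier segments using the 564/1692 µs timings above. It is not the code from light_sender.ino. Several details are assumptions made for illustration:

- the type sits in the top 4 bits;
- bits are sent most significant first;
- the names `pack_frame`, `encode_frame` and `segment_t` are invented.

The sync bursts are left out because their timing is not given here.

```c
#include <stddef.h>
#include <stdint.h>

/* Carrier frequencies from the text: 38 kHz means "light", 48 kHz "no light". */
#define CARRIER_LIGHT_HZ   38000u
#define CARRIER_NOLIGHT_HZ 48000u

/* Bit timings from the text, in microseconds. */
#define MARK_US       564u  /* 38 kHz part of every bit */
#define ZERO_SPACE_US 564u  /* 48 kHz part of a 0 bit   */
#define ONE_SPACE_US  1692u /* 48 kHz part of a 1 bit   */

#define FRAME_BITS 20

typedef struct {
    uint32_t carrier_hz;  /* carrier to drive the LED with */
    uint16_t duration_us; /* how long to keep that carrier */
} segment_t;

/* Assumption: the 4-bit type sits above the 16-bit value. */
uint32_t pack_frame(uint8_t type, uint16_t value)
{
    return ((uint32_t)(type & 0x0Fu) << 16) | value;
}

/* Expand a 20-bit frame into carrier segments, most significant bit
   first (assumed order). Each bit is a 48 kHz period followed by a
   38 kHz period. out must have room for 2 * FRAME_BITS segments.
   Sync bursts are not modelled. Returns the number of segments. */
size_t encode_frame(uint32_t frame, segment_t *out)
{
    size_t n = 0;
    for (int bit = FRAME_BITS - 1; bit >= 0; bit--) {
        uint32_t one = (frame >> bit) & 1u;
        out[n].carrier_hz = CARRIER_NOLIGHT_HZ;
        out[n].duration_us = one ? ONE_SPACE_US : ZERO_SPACE_US;
        n++;
        out[n].carrier_hz = CARRIER_LIGHT_HZ;
        out[n].duration_us = MARK_US;
        n++;
    }
    return n;
}
```

Every bit ends with the same 564 µs burst of 38 kHz. A frame without the sync part therefore lasts between 20 × 1128 µs = 22.56 ms (all zeros) and 20 × 2256 µs = 45.12 ms (all ones).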

The system therefore consists of three elements: the emitter circuit that drives the light source, the adapter that receives these light signals and relays them to the smartphone, and finally the smartphone that acts upon those light signals.

- In the prototype I am about to present, the emitter is based on an Atmel ATmega328P microcontroller in the form of an Arduino Pro Mini board.

- The smartphone has no light receiver, and the Android application model cannot do real-time processing anyway. There is therefore an adapter in between: on one side it receives and decodes the light signals, and on the other it talks to the smartphone over Bluetooth Low Energy (BLE). This element is implemented with two microcontrollers: an ATmega328P that does the real-time light signal processing and an nRF51822 SoC that handles the BLE interface. Since the nRF51822 is quite a capable ARM Cortex-M0 microcontroller, it is an interesting question why the light signal processing had to be offloaded to another microcontroller. The reason is the bad experiences I had with the real-time behaviour of the nRF51822 while its Bluetooth stack is running.

- The third element is a smartphone that connects to the adapter over BLE and displays whatever the adapter receives. This part is implemented in Android.

And this is how it looks, with the lighting LED beside the microcontroller card.

The source code can be found in this archive, in the light_sender/sketch subdirectory. You have to adapt the ARDUINO_DIR variable and probably also the ISP_PROG and ISP_PORT variables to your programming tool. The AC input voltage may need to be adapted to the lighting LED you choose; an effective (RMS) value of 38 VAC worked well for me with a wide range of lighting LEDs. The input voltage source also powers the microcontroller, and this is a really sensitive area: I burnt a Pro Mini by getting something wrong here. The problem is the large voltage drop between the LED supply voltage and the 3.3 V that supplies the microcontroller. Before you insert the Pro Mini into its socket, make sure that VCC is 3.3 V by adjusting TM1. Also, the Q2 FET has to be chosen carefully; the IRL540N has a drain-to-source breakdown voltage of 100 V, which is more than enough for this application.
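A little arithmetic shows why both warnings matter. The C sketch below makes two assumptions that are not part of the original circuit description:

- the 38 VAC input is rectified and smoothed, so the supply rail sits near the sine peak (diode drops ignored);
- the 3.3 V side draws a purely hypothetical 20 mA.

The real supply circuit is the one in the schematic, not this model.

```c
/* Square root of 2, written out to avoid depending on libm. */
#define SQRT2 1.4142135623730951

/* Peak of a sine wave given its RMS ("effective") value. */
double ac_peak_volts(double v_rms)
{
    return v_rms * SQRT2;
}

/* Power dissipated by a linear element dropping v_in to v_out at
   current i_amps. The current used in the example is hypothetical. */
double linear_drop_watts(double v_in, double v_out, double i_amps)
{
    return (v_in - v_out) * i_amps;
}
```

`ac_peak_volts(38.0)` gives about 53.7 V, comfortably below the IRL540N's 100 V rating. Dropping that to 3.3 V linearly at the assumed 20 mA gives `linear_drop_watts(ac_peak_volts(38.0), 3.3, 0.020)`, roughly 1 W. That is a lot of heat for a small regulator, which illustrates how little room for error this part of the circuit leaves.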

The station ID (used for the indoor location application) is hardcoded in light_sender.ino (STATION_ID). Ideally, every light bulb should have its own station ID.

Once powered, the emitter sends a steady 48 kHz signal, which is "no light" in our encoding scheme. Every second it sends an encoded 20-bit value, cycling through the station ID, temperature and humidity; the last two values are read from the DHT-22 sensor (U1). To a human observer, the light bulb is simply lit.
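The once-per-second rotation can be sketched as follows. This is a hypothetical C version, not the logic of light_sender.ino. The following are all assumptions:

- the type codes;
- the placeholder `STATION_ID` value;
- scaling temperature and humidity to tenths so they fit into the 16-bit field, with negative temperatures stored as two's complement.

```c
#include <stdint.h>

/* Hypothetical type codes; the real ones are defined in light_sender.ino. */
enum { TYPE_STATION_ID = 1, TYPE_TEMPERATURE = 2, TYPE_HUMIDITY = 3 };

/* Placeholder; the real sketch hardcodes its own STATION_ID per bulb. */
#define STATION_ID 0x0042u

typedef struct {
    uint8_t  type;  /* 4-bit data type selector */
    uint16_t value; /* 16-bit payload           */
} reading_t;

/* Pick what to send on the n-th one-second tick: station ID,
   temperature, humidity, then start again. Temperature and humidity
   are scaled to tenths and rounded to the nearest integer (assumed
   encoding); negative temperatures end up as two's complement. */
reading_t next_reading(uint32_t tick, float temp_c, float humidity_pct)
{
    reading_t r;
    switch (tick % 3u) {
    case 0:
        r.type = TYPE_STATION_ID;
        r.value = STATION_ID;
        break;
    case 1:
        r.type = TYPE_TEMPERATURE;
        r.value = (uint16_t)(int16_t)(temp_c * 10.0f + (temp_c < 0.0f ? -0.5f : 0.5f));
        break;
    default:
        r.type = TYPE_HUMIDITY;
        r.value = (uint16_t)(humidity_pct * 10.0f + 0.5f);
        break;
    }
    return r;
}
```

With this encoding, 23.4 °C becomes 234, and -5.2 °C becomes the 16-bit pattern of -52.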

Now on to the adapter. Here is the schematic.

And here is how it looks.

The light receiver caused me the most trouble. For starters, the photodiode required experimenting: I tried 5 different diodes and eventually found that the Osram SFH203 worked well for me. If you cannot obtain this type, be prepared to experiment as well. The next source of trouble was the IR receiver. Most IR receivers integrate the IR photodiode, and these devices are made insensitive to visible light. Eventually I found the VSOP58438, which has two problems: first, it is obsolete and therefore hard to get; second, it is a 2 mm × 2 mm square. Finally, my colleague helped me out and built the breakout board you see in the foreground, with the Osram photodiode connected to it.

The rest is simpler. The ATmega328P (again in the form of an Arduino Pro Mini board) runs a program that was originally designed as an IR receiver. It can be found in this archive file (light_receiver/sketch/light_receiver.ino). The receiver library is Chris Young's IRLib with the timings modified for our modulation scheme. When the ATmega328P receives a value, it sends it out on its serial output, which you can observe on the SER_LIGHT_CODE pin.
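The IR receiver chip only reports when the 38 kHz carrier is present. The 48 kHz periods therefore show up as gaps, and the length of each gap encodes one bit. The minimal C sketch below classifies those gaps. It assumes the decoder has already measured the 20 gap lengths in microseconds, most significant bit first, and it uses an assumed ±25 % tolerance. It illustrates the idea only; it is neither IRLib's API nor the modified code in light_receiver.ino.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define ZERO_SPACE_US 564u   /* "no light" part of a 0 bit */
#define ONE_SPACE_US  1692u  /* "no light" part of a 1 bit */
#define FRAME_BITS    20
#define TOLERANCE_PCT 25u    /* assumed tolerance */

/* True if measured is within TOLERANCE_PCT percent of expected. */
static bool matches(uint32_t measured, uint32_t expected)
{
    uint32_t slack = expected * TOLERANCE_PCT / 100u;
    return measured + slack >= expected && measured <= expected + slack;
}

/* Rebuild a 20-bit frame from the measured lengths of the "no light"
   (48 kHz) periods, most significant bit first. Returns false if the
   count is wrong or a period matches neither a 0 nor a 1. */
bool decode_spaces(const uint32_t *space_us, size_t count, uint32_t *frame)
{
    if (count != FRAME_BITS)
        return false;
    uint32_t value = 0;
    for (size_t i = 0; i < count; i++) {
        value <<= 1;
        if (matches(space_us[i], ONE_SPACE_US))
            value |= 1u;
        else if (!matches(space_us[i], ZERO_SPACE_US))
            return false;
    }
    *frame = value;
    return true;
}
```

With 25 % tolerance, a 0 accepts 423-705 µs and a 1 accepts 1269-2115 µs. The two windows do not overlap, so a gap can never be read as both.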

The data goes into the nRF51822 SoC, which I used in the form of a breakout board (here is an earlier post that describes the board and the development environment). Also, check out this post for instructions on how to compile and upload the project to the board. Here is the archive that contains the code for the nRF51822 SoC. The application on the nRF51822 looks long, but it is mostly boilerplate code; its actual operation is very simple. Whenever it gets a value from the serial port, it writes that value into a BLE characteristic that has notifications enabled. This means that whoever is subscribed to that notification gets the event immediately, without polling the characteristic.
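The serial-to-BLE step boils down to two tasks: assembling a value from the incoming UART bytes, and turning that value into the bytes of the characteristic. The C sketch below makes hypothetical assumptions about both formats:

- the ATmega328P prints each value as decimal ASCII followed by a newline;
- the characteristic holds the 20-bit value as 3 little-endian bytes.

The actual formats are defined in the two archives. The BLE stack calls that update the characteristic and send the notification are left out.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical wire format: decimal ASCII digits ending in '\n'. */
typedef struct {
    uint32_t acc;    /* value accumulated so far */
    bool     digits; /* at least one digit seen  */
} line_parser_t;

/* Feed one byte received on the UART. Returns true when a complete
   value is in *out; at that point the firmware would write it into the
   BLE characteristic and notify subscribers. Other bytes such as '\r'
   are ignored. */
bool parser_feed(line_parser_t *p, char c, uint32_t *out)
{
    if (c >= '0' && c <= '9') {
        p->acc = p->acc * 10u + (uint32_t)(c - '0');
        p->digits = true;
        return false;
    }
    if (c == '\n') {
        bool complete = p->digits;
        if (complete)
            *out = p->acc & 0xFFFFFu; /* keep the 20 payload bits */
        p->acc = 0;
        p->digits = false;
        return complete;
    }
    return false;
}

/* Assumed characteristic layout: the 20-bit value as 3 little-endian bytes. */
void frame_to_bytes(uint32_t frame, uint8_t out[3])
{
    out[0] = (uint8_t)(frame & 0xFFu);
    out[1] = (uint8_t)((frame >> 8) & 0xFFu);
    out[2] = (uint8_t)((frame >> 16) & 0x0Fu);
}
```

Parsing byte by byte keeps the UART handler tiny; the potentially slower BLE update only happens once a complete value has arrived.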

And finally, the reason this topic appears on an Android-themed blog at all: the detected values are consumed by an Android application. Click here to download the source code and read this blog post on how to convert the sources into an Android Studio project. The Android application is very similar to the previous ones. It scans for BLE endpoints with a unique UUID (274b15b0-b9cd-4e5e-94c4-1248b42b82f8 in our case) and, when it finds one, connects to the endpoint using connection-oriented BLE. It then subscribes to the light data characteristic (274b0100-b9cd-4e5e-94c4-1248b42b82f8). Whenever new data arrives from that characteristic, it evaluates the 4-bit data type and displays the lower 16 bits accordingly.
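On the phone, the received value is split into the 4-bit type and the 16-bit payload. The following is a C sketch of that decoding step, not code from the Android application. The type codes (1 = station ID, 2 = temperature, 3 = humidity) and the scaling to tenths are hypothetical.

```c
#include <stdint.h>
#include <stdio.h>

/* Hypothetical type codes and scaling (tenths); not taken from the app. */
enum { TYPE_STATION_ID = 1, TYPE_TEMPERATURE = 2, TYPE_HUMIDITY = 3 };

/* Split a received 20-bit value into the 4-bit type (top bits) and the
   16-bit payload (lower bits), and render it as text into buf. */
void describe_frame(uint32_t frame, char *buf, size_t len)
{
    unsigned type = (unsigned)((frame >> 16) & 0x0Fu);
    uint16_t value = (uint16_t)(frame & 0xFFFFu);

    switch (type) {
    case TYPE_STATION_ID:
        snprintf(buf, len, "station %u", (unsigned)value);
        break;
    case TYPE_TEMPERATURE: {
        /* Signed tenths of a degree; assumes two's complement int16_t. */
        int t = (int16_t)value;
        int mag = t < 0 ? -t : t;
        snprintf(buf, len, "temperature %s%d.%d C", t < 0 ? "-" : "", mag / 10, mag % 10);
        break;
    }
    case TYPE_HUMIDITY:
        snprintf(buf, len, "humidity %u.%u %%", (unsigned)(value / 10u), (unsigned)(value % 10u));
        break;
    default:
        snprintf(buf, len, "type %u: %u", type, (unsigned)value);
        break;
    }
}
```

Unknown type codes fall through to a raw display, so an emitter that adds a new data type does not break an older app.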

Below is a small video showing how the thing works.

Note the normal light sources and the small spot of the smart light source that emits the data. The data is picked up from a distance of 3-4 meters.